React Tutorial

This tutorial is a simple video-call application built with React that allows:

- Joining a video call room by requesting a token from any application server.

- Publishing your camera and microphone.

- Subscribing to all other participants' video and audio tracks automatically.

- Leaving the video call room at any time.

It uses the LiveKit JS SDK to connect to the LiveKit server and interact with the video call room.

Running this tutorial

1. Run OpenVidu Server

- Download OpenVidu

- Configure the local deployment

- Run OpenVidu

To use a production-ready OpenVidu deployment, visit the official deployment guide.

Configure Webhooks

All application servers have an endpoint to receive webhooks from OpenVidu. For this reason, when using a production deployment, you need to configure webhooks to point to your local application server for it to work. Check the Send Webhooks to a Local Application Server section for more information.

2. Download the tutorial code

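3. Run a server application

You only need to run one of the following server applications (Node.js, Go, Ruby, Java, Python, Rust, PHP or .NET).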

To run the Node.js server application, you need Node.js installed on your device.

- Navigate into the server directory

- Install dependencies

- Run the application

For more information, check the Node.js tutorial.

To run the Go server application, you need Go installed on your device.

- Navigate into the server directory

- Run the application

For more information, check the Go tutorial.

To run the Ruby server application, you need Ruby installed on your device.

- Navigate into the server directory

- Install dependencies

- Run the application

For more information, check the Ruby tutorial.

To run the Java server application, you need Java and Maven installed on your device.

- Navigate into the server directory

- Run the application

For more information, check the Java tutorial.

To run the Python server application, you need Python 3 installed on your device.

- Navigate into the server directory

- Create a Python virtual environment

- Activate the virtual environment

- Install dependencies

- Run the application

For more information, check the Python tutorial.

To run the Rust server application, you need Rust installed on your device.

- Navigate into the server directory

- Run the application

For more information, check the Rust tutorial.

To run the PHP server application, you need PHP and Composer installed on your device.

- Navigate into the server directory

- Install dependencies

- Run the application

Warning

The LiveKit PHP SDK requires the BCMath library. It is available out of the box in PHP for Windows, but other operating systems might require a manual installation. Run sudo apt install php-bcmath or sudo yum install php-bcmath, depending on your distribution.

For more information, check the PHP tutorial.

To run the .NET server application, you need .NET installed on your device.

- Navigate into the server directory

- Run the application

Warning

This .NET server application requires the LIVEKIT_API_SECRET environment variable to be at least 32 characters long. Make sure to update it here and in your OpenVidu Server.

For more information, check the .NET tutorial.

4. Run the client application

To run the client application tutorial, you need Node installed on your development computer.

- Navigate into the application client directory:

- Install dependencies:

- Run the application:

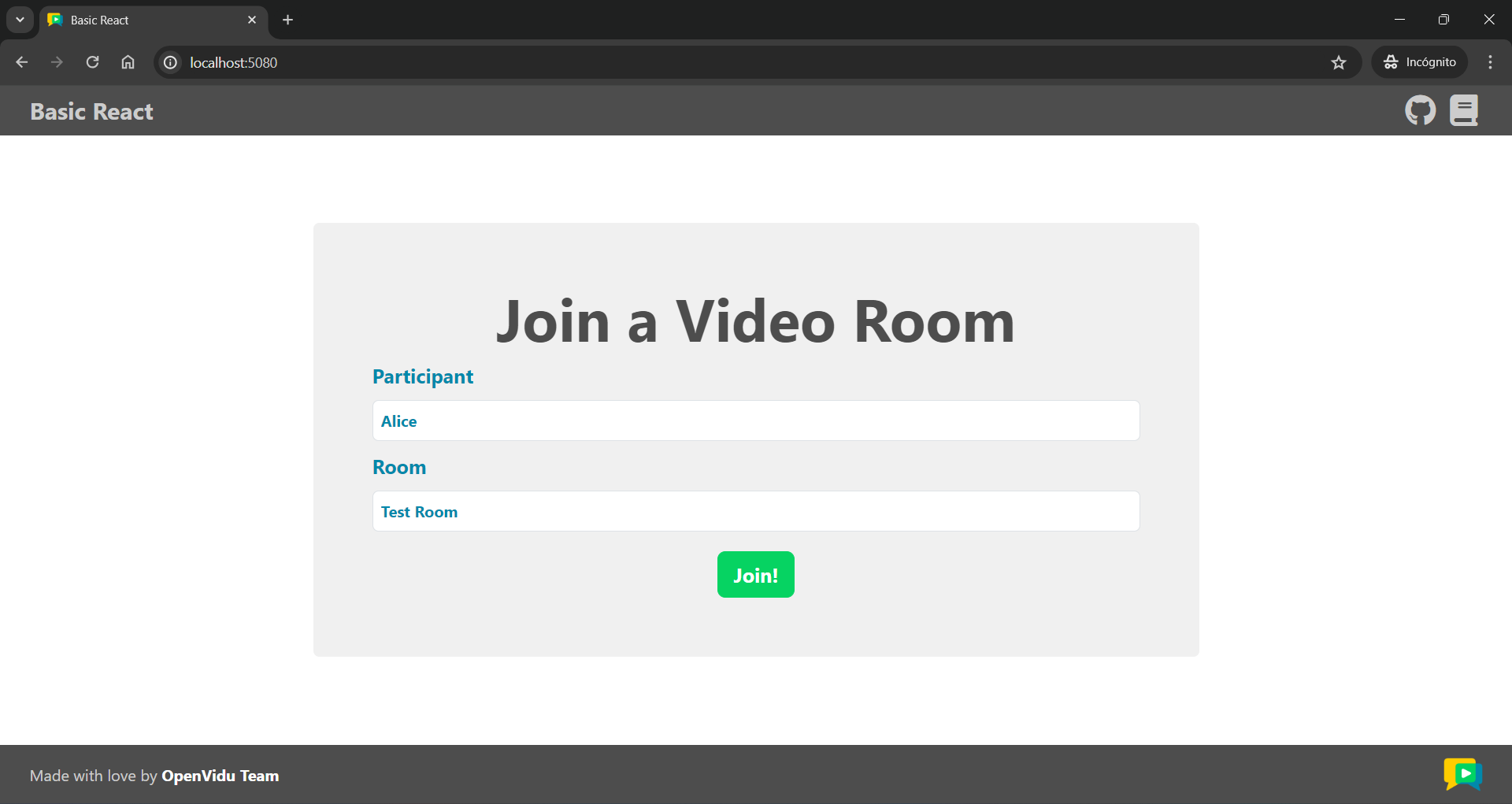

Once the server is up and running, you can test the application by visiting http://localhost:5080. You should see a screen like this:

Accessing your application client from other devices in your local network

One advantage of running OpenVidu locally is that you can test your application client with other devices in your local network very easily without worrying about SSL certificates.

Access your application client through https://xxx-yyy-zzz-www.openvidu-local.dev:5443, where the xxx-yyy-zzz-www part of the domain is your LAN private IP address with dashes (-) instead of dots (.). For more information, see the section Accessing your local deployment from other devices on your network.
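The dots-to-dashes mapping can be expressed as a small function. This is a hypothetical helper (openviduLocalDevUrl is not part of the tutorial code), assuming a dotted-quad IPv4 address and the port 5443 mentioned above; it only builds the URL string and does not check that your deployment is reachable.

```typescript
// Hypothetical helper (not part of the tutorial code): builds the
// openvidu-local.dev URL for a LAN private IPv4 address.
function openviduLocalDevUrl(lanIp: string, port: number = 5443): string {
  const octets = lanIp.split(".");
  // A dotted-quad IPv4 address has exactly four octets, each in 0..255.
  const isValid =
    octets.length === 4 &&
    octets.every((octet) => /^\d{1,3}$/.test(octet) && Number(octet) <= 255);
  if (!isValid) {
    throw new Error(`Not a dotted-quad IPv4 address: ${lanIp}`);
  }
  // Dots become dashes to form the subdomain, e.g. 192.168.1.100 -> 192-168-1-100.
  return `https://${octets.join("-")}.openvidu-local.dev:${port}`;
}

// Usage: prints "https://192-168-1-100.openvidu-local.dev:5443"
console.log(openviduLocalDevUrl("192.168.1.100"));
```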

Understanding the code

This React project has been generated using Vite. You may come across various configuration files and other items that are not essential for this tutorial. Our focus will be on the key files located within the src/ directory:

- App.tsx: This file defines the main application component. It is responsible for handling tasks such as joining a video call and managing the video call itself.

- App.css: This file contains the styles for the main application component.

- VideoComponent.tsx: This file defines the VideoComponent. This component is responsible for displaying video tracks along with the participant's data. Its associated styles are in VideoComponent.css.

- AudioComponent.tsx: This file defines the AudioComponent. This component is responsible for playing audio tracks.

To use the LiveKit JS SDK in a React application, you need to install the livekit-client package. This package provides the necessary classes and methods to interact with the LiveKit server. You can install it by running npm install livekit-client.

Now let's see the code of the App.tsx file:


The App.tsx file defines a TrackInfo type, which groups a track publication with the participant's identity, and the following variables:

- APPLICATION_SERVER_URL: The URL of the application server. This variable is used to make requests to the server to obtain a token for joining the video call room.

- LIVEKIT_URL: The URL of the LiveKit server. This variable is used to connect to the LiveKit server and interact with the video call room.

- room: The room object, which represents the video call room.

- localTrack: The local video track, which represents the user's camera.

- remoteTracks: An array of TrackInfo objects, which group a track publication with the participant's identity.

- participantName: The participant's name.

- roomName: The room name.

Configure the URLs

When running OpenVidu locally, leave the APPLICATION_SERVER_URL and LIVEKIT_URL variables empty. The configureUrls() function will automatically configure them with default values. However, for other deployment types, you should set these variables to the correct URLs for your deployment.
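The body of configureUrls() is not shown here, so the following is only a sketch of how such defaults could be resolved from the page's hostname. The function name resolveUrls and the port numbers (6080/6443 for the application server, 7880/7443 for LiveKit) are assumptions; check them against your own deployment. The idea is that localhost uses plain http/ws, while any other hostname (such as the openvidu-local.dev domain used from other devices) goes through TLS.

```typescript
// Sketch of URL auto-configuration for a local OpenVidu deployment.
// ASSUMED ports: application server 6080 (HTTP) / 6443 (HTTPS),
// LiveKit 7880 (WS) / 7443 (WSS). Adjust to your deployment.
interface ServerUrls {
  applicationServerUrl: string;
  livekitUrl: string;
}

function resolveUrls(hostname: string, configured: Partial<ServerUrls> = {}): ServerUrls {
  const isLocalhost = hostname === "localhost";
  // Empty or missing values fall back to local-deployment defaults.
  const applicationServerUrl =
    configured.applicationServerUrl ||
    (isLocalhost ? "http://localhost:6080/" : `https://${hostname}:6443/`);
  const livekitUrl =
    configured.livekitUrl ||
    (isLocalhost ? "ws://localhost:7880/" : `wss://${hostname}:7443/`);
  return { applicationServerUrl, livekitUrl };
}

// In the browser you would pass window.location.hostname, for example:
// const { applicationServerUrl, livekitUrl } = resolveUrls(window.location.hostname);
```

Keeping the resolution in a pure function of the hostname makes the defaults easy to test, while explicitly configured URLs always take precedence.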

Joining a Room

When the user enters their participant name and the name of the room they want to join and clicks the Join button, the joinRoom() function is called:


The joinRoom() function performs the following actions:

-

It creates a new

Roomobject. This object represents the video call room.Info

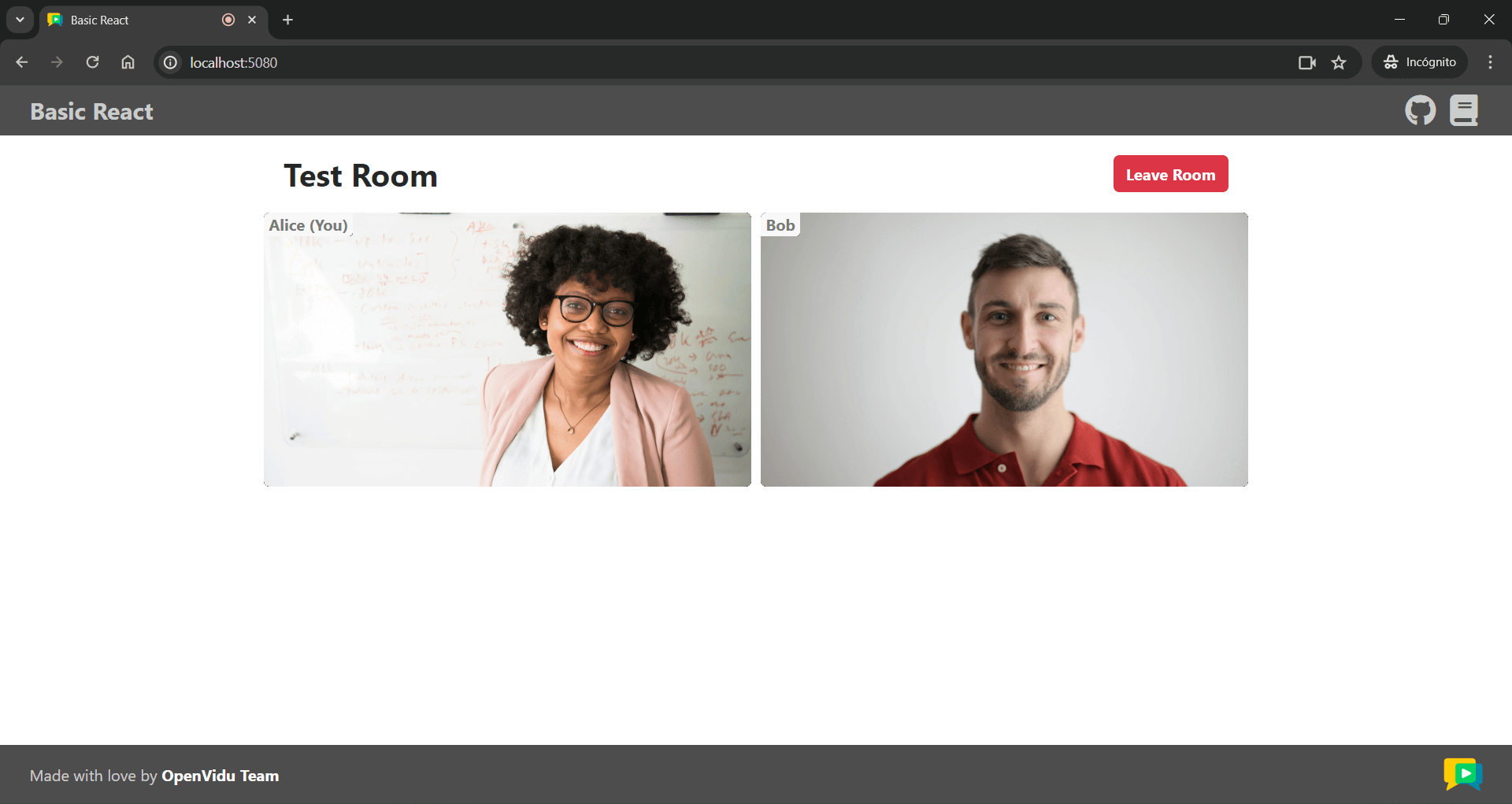

When the room object is defined, the HTML template is automatically updated hiding the "Join room" page and showing the "Room" layout.

-

Event handling is configured for different scenarios within the room. These events are fired when new tracks are subscribed to and when existing tracks are unsubscribed.

-

RoomEvent.TrackSubscribed: This event is triggered when a new track is received in the room. It manages the storage of the new track in theremoteTracksarray as aTrackInfoobject containing the track publication and the participant's identity. -

RoomEvent.TrackUnsubscribed: This event occurs when a track is destroyed, and it takes care of removing the track from theremoteTracksarray.

These event handlers are essential for managing the behavior of tracks within the video call. You can further extend the event handling as needed for your application.

Take a look at all events

You can take a look at all the events in the Livekit Documentation

-

-

It requests a token from the application server using the room name and participant name. This is done by calling the

getToken()function:App.tsx /** * -------------------------------------------- * GETTING A TOKEN FROM YOUR APPLICATION SERVER * -------------------------------------------- * The method below request the creation of a token to * your application server. This prevents the need to expose * your LiveKit API key and secret to the client side. * * In this sample code, there is no user control at all. Anybody could * access your application server endpoints. In a real production * environment, your application server must identify the user to allow * access to the endpoints. */ async function getToken(roomName: string, participantName: string) { const response = await fetch(APPLICATION_SERVER_URL + "token", { method: "POST", headers: { "Content-Type": "application/json" }, body: JSON.stringify({ roomName: roomName, participantName: participantName }) }); if (!response.ok) { const error = await response.json(); throw new Error(`Failed to get token: ${error.errorMessage}`); } const data = await response.json(); return data.token; }This function sends a POST request using

fetch()to the application server's/tokenendpoint. The request body contains the room name and participant name. The server responds with a token that is used to connect to the room. -

It connects to the room using the LiveKit URL and the token.

- It publishes the camera and microphone tracks to the room using

room.localParticipant.enableCameraAndMicrophone(), which asks the user for permission to access their camera and microphone at the same time. The local video track is then stored in thelocalTrackvariable.
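The joining steps above can be sketched as a standalone function. This is not the tutorial's exact code: to keep it runnable without livekit-client, RoomLike, TrackPublicationLike and ParticipantLike are minimal structural stand-ins for Room, RemoteTrackPublication and RemoteParticipant, and the "trackSubscribed"/"trackUnsubscribed" strings are assumed stand-ins for RoomEvent.TrackSubscribed and RoomEvent.TrackUnsubscribed. In the real app you would pass new Room() and use the RoomEvent enum. Error handling and storing the local video track are omitted.

```typescript
// Minimal structural stand-ins for the livekit-client types used by joinRoom().
interface TrackPublicationLike {
  trackSid: string;
  kind: string;
}

interface ParticipantLike {
  identity: string;
}

type TrackHandler = (
  track: unknown,
  publication: TrackPublicationLike,
  participant: ParticipantLike
) => void;

// ASSUMED stand-ins for RoomEvent.TrackSubscribed / RoomEvent.TrackUnsubscribed.
type TrackEventName = "trackSubscribed" | "trackUnsubscribed";

interface RoomLike {
  on(event: TrackEventName, handler: TrackHandler): RoomLike;
  connect(url: string, token: string): Promise<void>;
  localParticipant: { enableCameraAndMicrophone(): Promise<void> };
}

// Groups a track publication with the identity of the participant who published it.
type TrackInfo = {
  trackPublication: TrackPublicationLike;
  participantIdentity: string;
};

// Pure state updates: return new arrays instead of mutating, as React state expects.
function addTrack(tracks: TrackInfo[], publication: TrackPublicationLike, identity: string): TrackInfo[] {
  return [...tracks, { trackPublication: publication, participantIdentity: identity }];
}

function removeTrack(tracks: TrackInfo[], trackSid: string): TrackInfo[] {
  return tracks.filter((info) => info.trackPublication.trackSid !== trackSid);
}

async function joinRoom(
  room: RoomLike,
  livekitUrl: string,
  getToken: (roomName: string, participantName: string) => Promise<string>,
  roomName: string,
  participantName: string,
  setRemoteTracks: (update: (prev: TrackInfo[]) => TrackInfo[]) => void
): Promise<void> {
  // 1. Register the handlers before connecting so no subscription is missed.
  room.on("trackSubscribed", (_track, publication, participant) => {
    setRemoteTracks((prev) => addTrack(prev, publication, participant.identity));
  });
  room.on("trackUnsubscribed", (_track, publication) => {
    setRemoteTracks((prev) => removeTrack(prev, publication.trackSid));
  });

  // 2. Ask the application server for a token, then connect with it.
  const token = await getToken(roomName, participantName);
  await room.connect(livekitUrl, token);

  // 3. Publish camera and microphone (the browser prompts for permission).
  await room.localParticipant.enableCameraAndMicrophone();
}
```

Passing an updater function (which React's state setters accept) instead of a new array means each event handler works from the latest remoteTracks value, rather than the stale one captured when the handler was registered.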

Displaying Video and Audio Tracks

In order to display participants' video and audio tracks, the main component integrates the VideoComponent and AudioComponent.


This code snippet does the following:

- If localTrack is defined, we display the local video track using the VideoComponent. The local property is set to true to indicate that the video track belongs to the local participant.

Info

The audio track is not displayed for the local participant because there is no need to hear one's own audio.

- Then, we iterate over the remoteTracks array and, for each remote track, we create a VideoComponent or an AudioComponent depending on the track's kind (video or audio). The track property is set to the video or audio track, and for video tracks the participantIdentity property is set to the participant's identity.

Let's now look at the code of the VideoComponent.tsx file:


The VideoComponent does the following:

- It defines the properties track, participantIdentity, and local as props of the component:

  - track: The video track object, which can be a LocalVideoTrack or a RemoteVideoTrack.

  - participantIdentity: The participant identity associated with the video track.

  - local: A boolean flag that indicates whether the video track belongs to the local participant. This flag is set to false by default.

- It creates a reference to the video element in the HTML template.

- It attaches the video track to the video element when the component is mounted.

- It detaches the video track when the component is unmounted.
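The attach-on-mount, detach-on-unmount pattern can be isolated in a small function shaped like a React effect body. This is a sketch, not the tutorial's exact component code: bindTrackToElement is a hypothetical name, and AttachableTrack is a structural stand-in covering only the attach(element) and detach() methods of a LiveKit track. The same pattern applies to audio elements.

```typescript
// Structural stand-in for the two track methods the components rely on.
// E is the media element type (HTMLMediaElement in a browser).
interface AttachableTrack<E> {
  attach(element: E): E;
  detach(): E[];
}

// Hypothetical helper shaped like a React effect body: attach the track when the
// component mounts, and return a cleanup that detaches it on unmount.
function bindTrackToElement<E>(track: AttachableTrack<E>, element: E | null): () => void {
  if (element) {
    track.attach(element);
  }
  return () => {
    track.detach();
  };
}

// Possible use inside VideoComponent.tsx (sketch):
//   const videoElement = useRef<HTMLVideoElement | null>(null);
//   useEffect(() => bindTrackToElement(track, videoElement.current), [track]);
```

Returning the cleanup function lets React detach the old track both on unmount and before re-running the effect when the track prop changes.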

Finally, let's see the code of the AudioComponent.tsx file:

The AudioComponent is similar to the VideoComponent but is used to play audio tracks. It defines the track property as a prop of the component; this can be a LocalAudioTrack or a RemoteAudioTrack, although in this tutorial it will always be a RemoteAudioTrack. It also creates a reference to the audio element in the HTML template. The audio track is attached to the audio element when the component is mounted and detached when the component is unmounted.

Leaving the Room

When the user wants to leave the room, they can click the Leave Room button. This action calls the leaveRoom() function:

The leaveRoom() function performs the following actions:

- It disconnects the user from the room by calling the disconnect() method on the Room object.

- It resets all variables to their initial state.
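The two steps of leaving can be sketched as follows. This is not the tutorial's exact code: DisconnectableRoom is a structural stand-in for livekit-client's Room (only disconnect() is used), and the hypothetical reset callbacks stand in for the React state setters that clear room, localTrack and remoteTracks.

```typescript
// Structural stand-in for livekit-client's Room: only disconnect() is needed here.
interface DisconnectableRoom {
  disconnect(): Promise<void>;
}

// Hypothetical callbacks standing in for React state setters.
interface LeaveRoomDeps {
  room: DisconnectableRoom | undefined;
  resetRoom: () => void;
  resetLocalTrack: () => void;
  resetRemoteTracks: () => void;
}

async function leaveRoom(deps: LeaveRoomDeps): Promise<void> {
  // Leave the LiveKit room if we are in one.
  await deps.room?.disconnect();
  // Reset the state; with room undefined again, the UI shows the "Join room" page.
  deps.resetRoom();
  deps.resetLocalTrack();
  deps.resetRemoteTracks();
}
```

Awaiting disconnect() before resetting the state keeps the UI in the "Room" layout until the disconnection has completed.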